Currently there are two main options for designing a chatbot in Copilot Studio:

- The classic approach: using trigger phrases to invoke topics, adding actions to topics, using entities to extract information from the user, etc.

- Generative orchestration: the model decides which topic to trigger, which action to invoke, etc.

What are the pros and cons of each, and how do you decide which one to use? Let’s see!

Classic approach

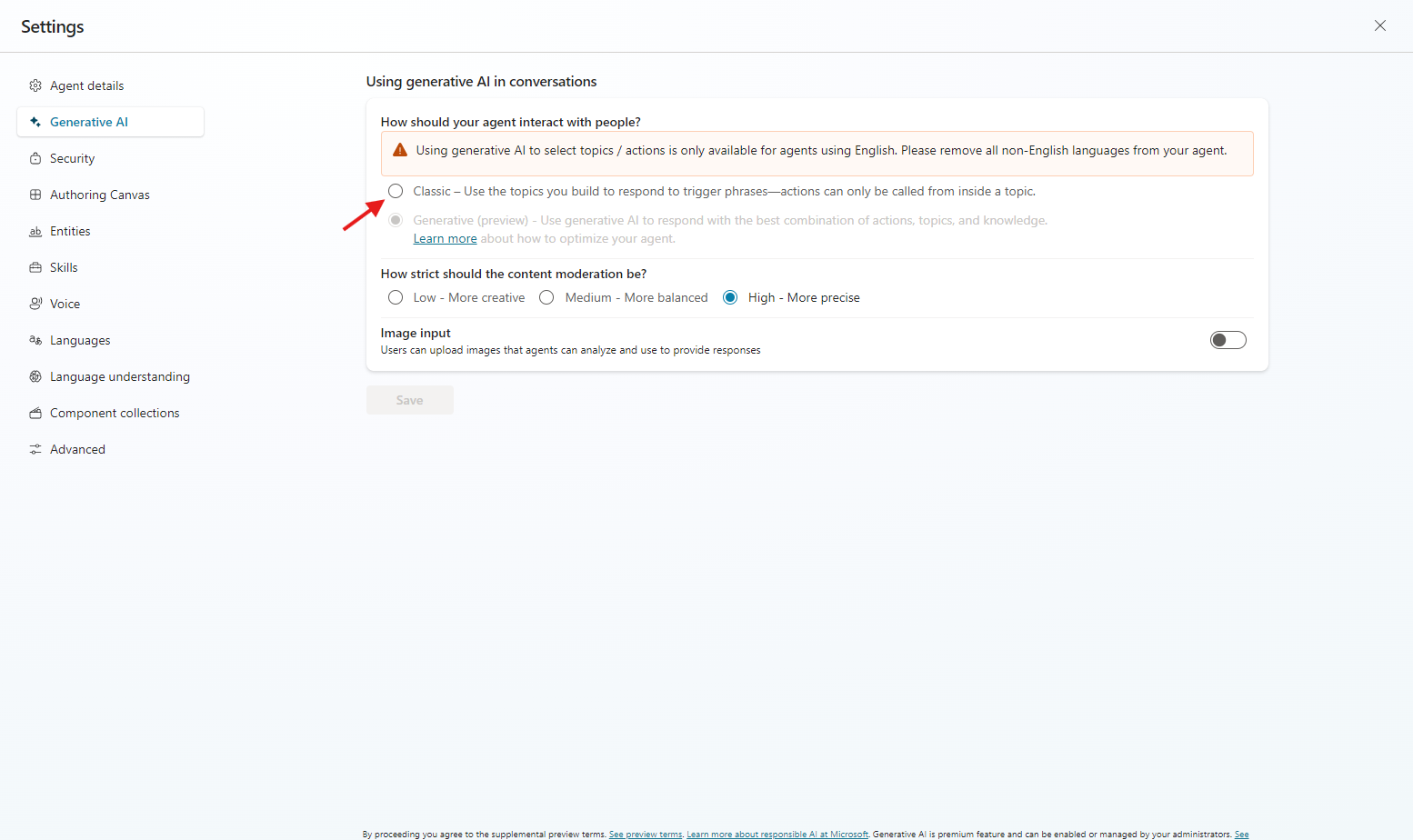

To use the classic approach, choose the Classic option in the chatbot settings:

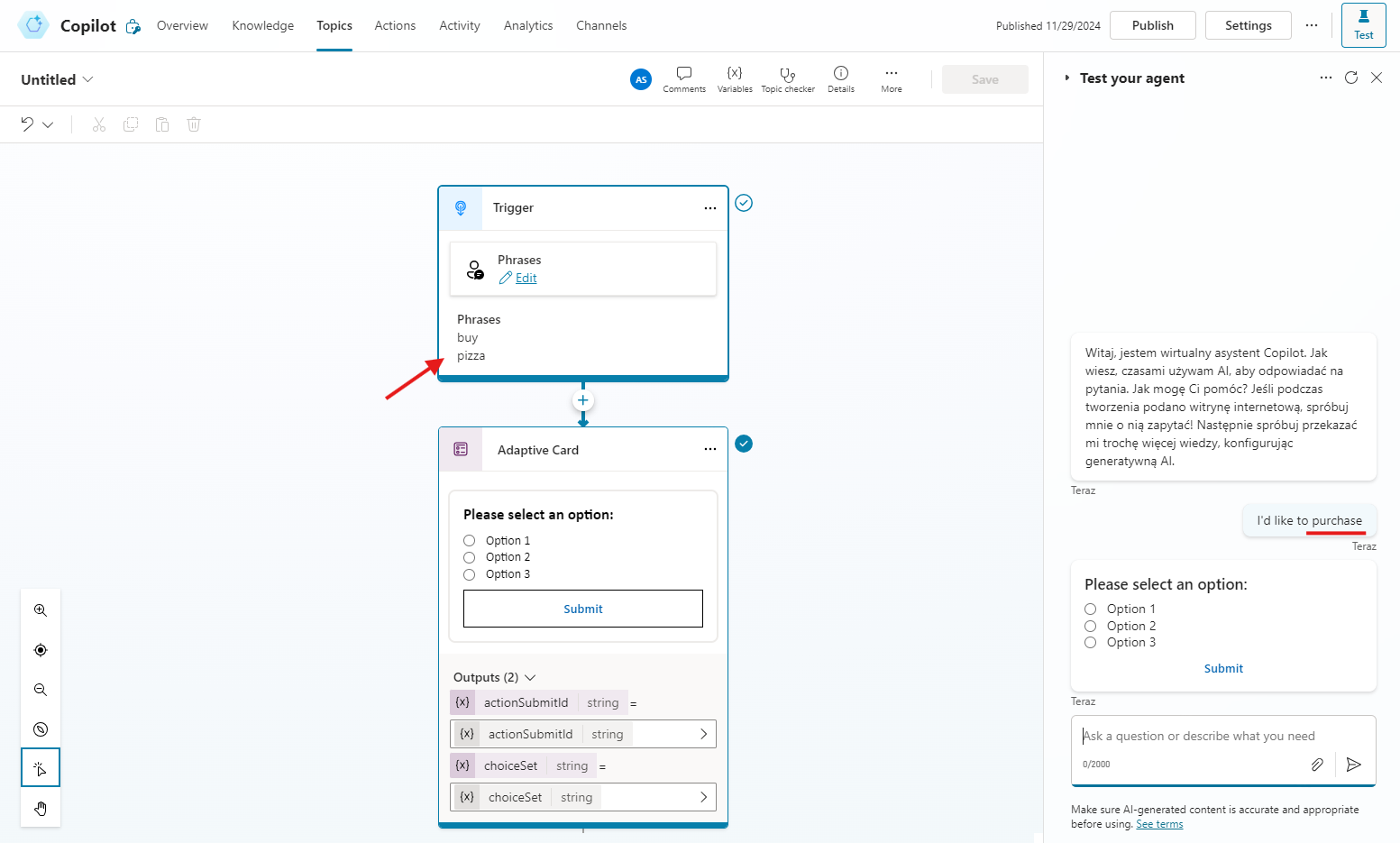

Once this is applied, the chatbot uses the classic way of triggering topics. For each topic we need to define so-called trigger phrases: words or phrases that invoke the topic when the user writes about something related.

For example, suppose we have defined a topic with the trigger phrases “pizza” and “buy”.

The user says: “I’d like to buy a pizza.”

The bot invokes the topic and performs all the actions it contains.

The NLP model behind the chat can also recognize synonyms. In the example below I use “purchase”, a synonym for the word “buy”:

As you can see, the bot still invokes the topic, even though my message does not contain the word “buy”. Note that actions, such as running a Power Automate flow or a connector action, must be added explicitly to the topic flow; otherwise they won’t be invoked.
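To make the idea of trigger phrases plus synonyms more concrete, here is a toy Python sketch of keyword-based topic matching with synonym expansion. It is only an illustration under simplifying assumptions (single-word trigger phrases and a hand-written synonym table); Copilot Studio’s actual NLU model is far more sophisticated and is not configured this way. The names `SYNONYMS`, `TOPICS` and `match_topic` are hypothetical.

```python
import re
from typing import Optional

# Hypothetical synonym table mapping a word to its canonical form.
# In Copilot Studio the NLU model handles synonyms itself; nothing like
# this is configured by the chatbot maker.
SYNONYMS = {
    "purchase": "buy",
    "order": "buy",
}

# Hypothetical topic definition: topic name -> single-word trigger phrases.
TOPICS = {
    "Buy pizza": ["pizza", "buy"],
}


def normalize(message: str) -> set:
    """Lower-case the message, split it into words, map synonyms to canonical words."""
    words = re.findall(r"[a-z']+", message.lower())
    return {SYNONYMS.get(word, word) for word in words}


def match_topic(message: str) -> Optional[str]:
    """Return the topic whose trigger phrases occur most often in the message, or None."""
    tokens = normalize(message)
    best_topic, best_score = None, 0
    for topic, phrases in TOPICS.items():
        score = sum(1 for phrase in phrases if phrase in tokens)
        if score > best_score:
            best_topic, best_score = topic, score
    return best_topic


print(match_topic("I'd like to buy a pizza."))       # Buy pizza
print(match_topic("I'd like to purchase a pizza."))  # Buy pizza
print(match_topic("What's the weather like?"))       # None
```

The point of the sketch is the classic model’s predictability: the same message always maps to the same topic, and a message with no matching phrase (or synonym) triggers nothing.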

What are the pros and cons of this approach?

| Pros | Cons |
|---|---|
| the outcome of invoking the topics is stable | the conversation feels artificial: the responses are the same each time |
| you have more control over what is used and what is not | it is sometimes hard to extract relevant information from the user (see: entities) |
| designing the chatbot is quite straightforward | preparing trigger phrases can be hard in some cases |

Generative orchestration

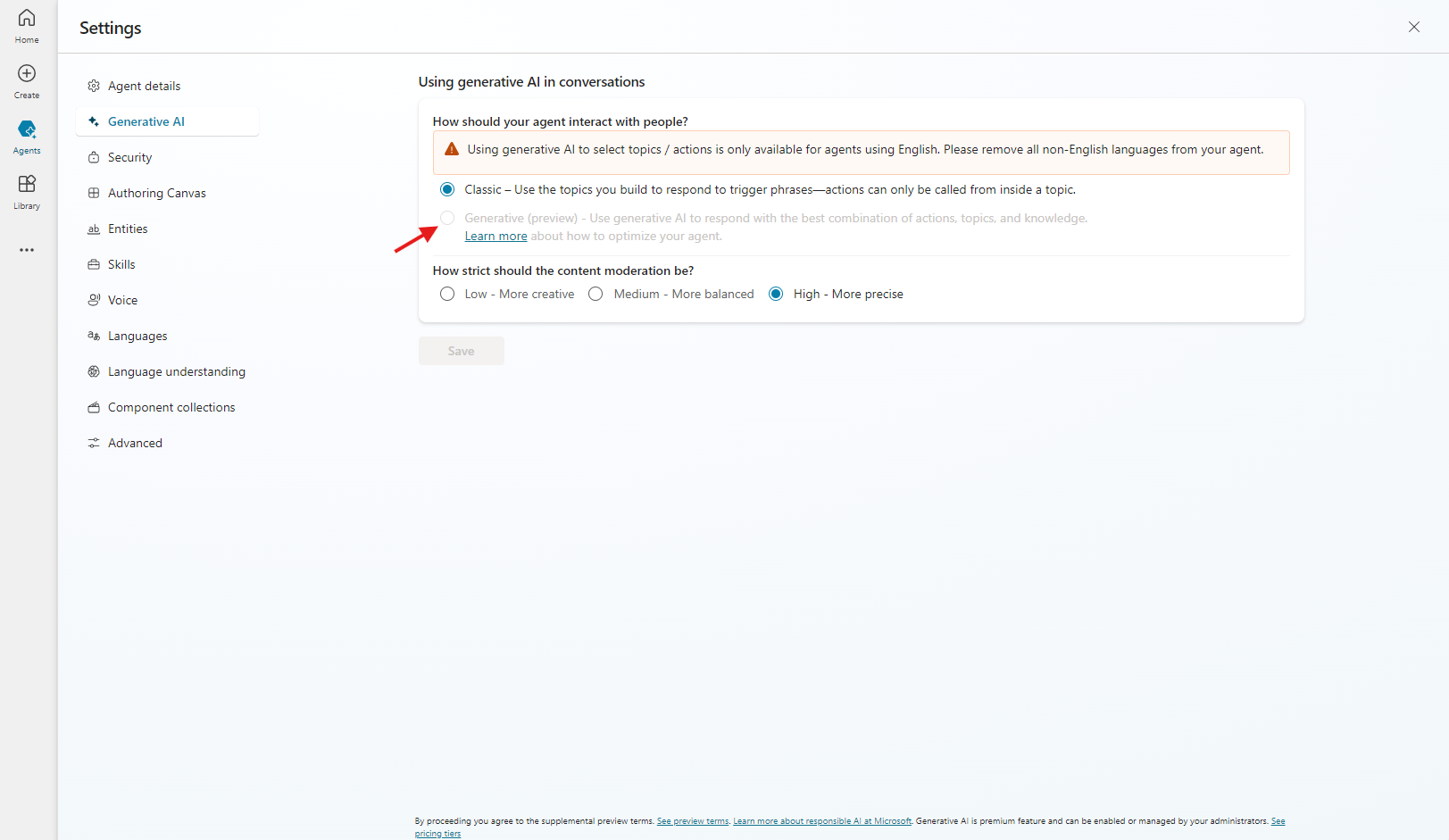

In the generative orchestration approach, the GPT model behind the chatbot decides which topic or action to invoke and how to respond to the user. To enable it, choose the Generative (preview) option in the chatbot settings:

Switching this setting changes a lot about how the chatbot is designed:

- A topic is invoked based on its description, not on trigger phrases.

- You can define an action for the chatbot, for example getting items from a SharePoint list, and the model can fill in the action’s inputs automatically and use its outputs when responding.

- When you test the chatbot, it shows a conversation map with information about the decisions the model took to answer the user.

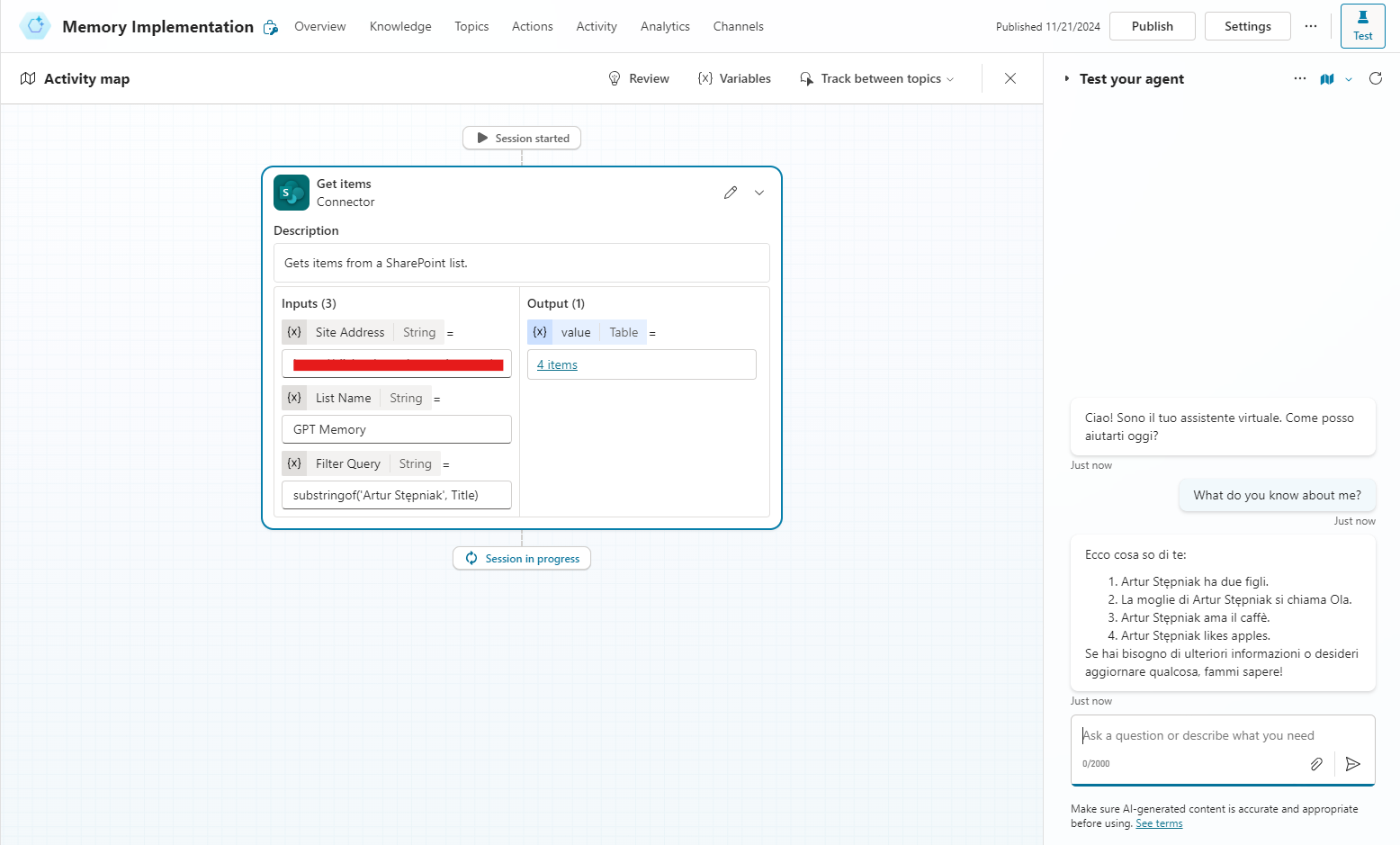

The conversation with the chatbot becomes more natural and straightforward. With the help of AI, the inputs of an action no longer need to be filled in manually: by combining the Instructions with relevant descriptions of the input parameters, the model can invoke an action and return an appropriate response. Here is an example of a chatbot that uses a SharePoint list as its memory:

The site address and list name were provided manually. The model was able to build the OData filter query by itself; it only needed a bit of tweaking in the Instructions, such as:

- you should only use the columns that are available to fill for your memory,

- you should use substringof() for the filter,

etc.
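The Instructions above push the model toward a specific shape of filter. As a rough illustration of that shape, here is a Python sketch that builds a `substringof()`-based OData filter string, the kind of value that goes into a filter query parameter such as the Filter Query of the SharePoint connector’s Get items action. In the real chatbot the model writes this string itself and no such code runs; the column names (`Title`, `Category`, `Notes`) and the helpers `odata_literal` and `build_filter` are hypothetical.

```python
# Hypothetical set of list columns the chatbot is allowed to filter on,
# mirroring the instruction "only use the columns that are available".
ALLOWED_COLUMNS = {"Title", "Category", "Notes"}


def odata_literal(value: str) -> str:
    """Quote a value as an OData string literal: wrap in single quotes, double embedded quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_filter(criteria: dict) -> str:
    """Build a filter of substringof() clauses joined with 'and', one per column/value pair."""
    clauses = []
    for column, value in criteria.items():
        if column not in ALLOWED_COLUMNS:
            # Reject columns outside the allowed set instead of guessing.
            raise ValueError(f"Column not available for filtering: {column}")
        clauses.append(f"substringof({odata_literal(value)}, {column})")
    return " and ".join(clauses)


print(build_filter({"Title": "pizza"}))
# substringof('pizza', Title)
print(build_filter({"Title": "O'Brien", "Category": "order"}))
# substringof('O''Brien', Title) and substringof('order', Category)
```

Restricting the columns and the function mirrors what the Instructions do for the model: they narrow the space of filters it can produce, which makes the generated query easier to predict and test.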

That way you can design a chatbot that communicates with various services and responds to the user with the data it retrieves, while the conversation feels more natural to the user.

What are the pros and cons of this approach?

| Pros | Cons |
|---|---|
| the conversation feels natural, so the user experience is better | the outcome of the conversation is less predictable |
| you don’t need to configure everything, as you can rely on the model’s built-in knowledge (e.g. preparing the OData filter) | you need to test more to ensure that the outcome is correct |
| you can use the GPT model freely, without adding a Generative answers node to the topic | you need more time to prepare the descriptions of each action, the instructions for the model, etc. |

Summary

Which way is better for building a chatbot? The answer depends on the particular business case and its requirements. Generative orchestration definitely has a brighter future, as it fully utilizes the GPT model’s capabilities, but it is not the best approach for every solution. If the outcome of a conversation must always be stable and predictable, without exceptions, the classic approach is the better choice. However, if you can accept some unpredictability in the responses and want to make full use of the GPT model’s capabilities, generative orchestration is the way to go. We also need to consider that, at the time of writing, generative orchestration is still a preview feature, so Microsoft does not recommend it for production workloads. To sum up, I’ve prepared a table illustrating the differences between the two approaches:

| Classic | Generative orchestration |
|---|---|
| uses NLP to invoke topics and run actions | uses a GPT model to invoke topics and run actions |
| the conversation is predictable and can be defined using conditional logic | the conversation is less predictable, and the outcome can sometimes differ from what was expected |
| you need to manually define each step the chatbot performs to answer the user | the GPT model decides which steps to take to answer the user, based on the descriptions you provide |

Did you like the article? Feel free to share your thoughts in the comments section!

Artur Stepniak

Useful links:

https://learn.microsoft.com/en-us/microsoft-copilot-studio/advanced-generative-actions

https://learn.microsoft.com/en-us/microsoft-copilot-studio/nlu-boost-node

https://learn.microsoft.com/en-us/microsoft-copilot-studio/advanced-entities-slot-filling
